The team of researchers consists of Professor Guenevere Chen of the University of Texas at San Antonio (UTSA), her doctoral student Qi Xia, and Professor Shouhuai Xu of the University of Colorado Colorado Springs (UCCS).

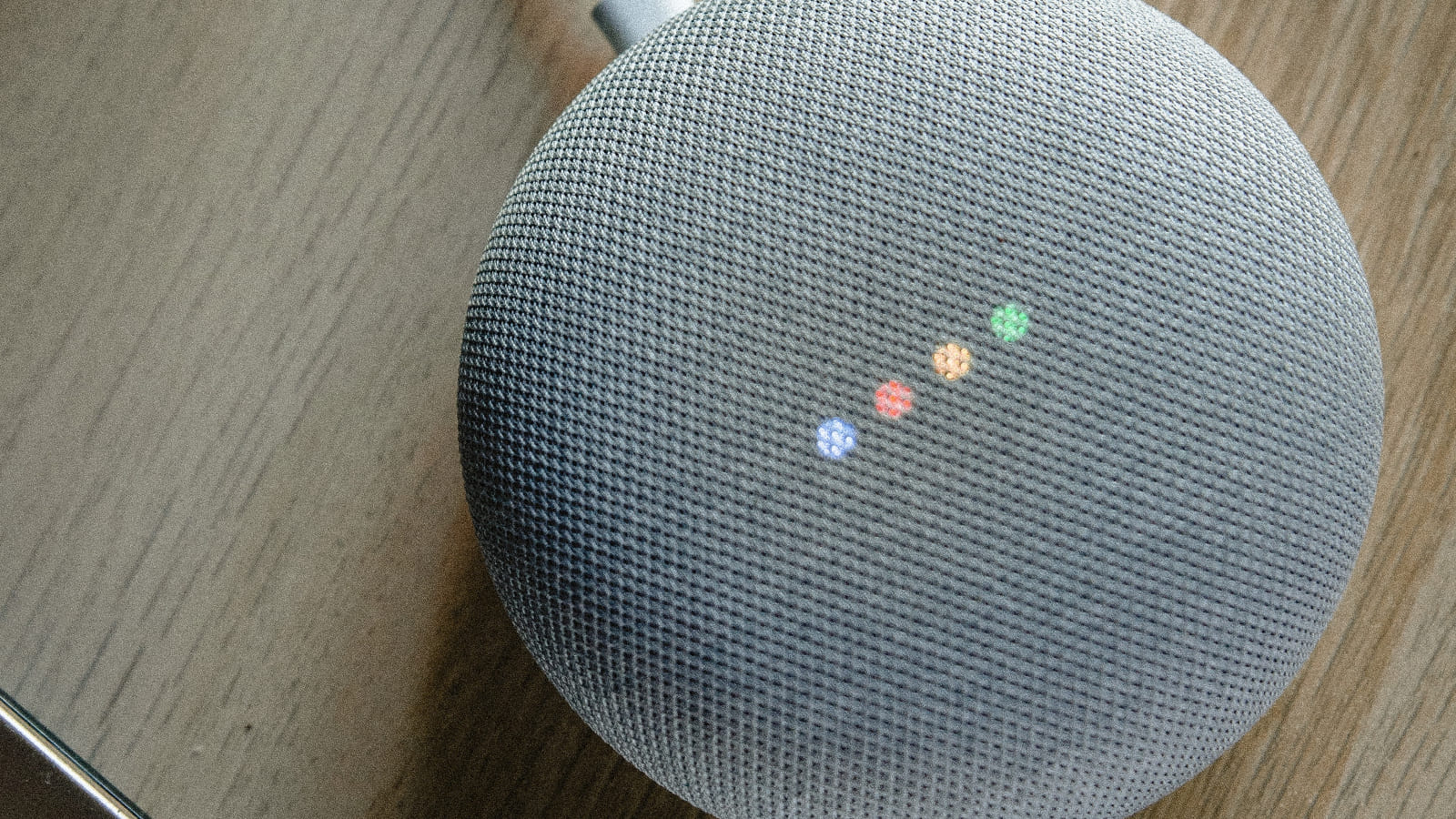

The team demonstrated NUIT attacks against modern voice assistants found in millions of devices, including Apple's Siri, Google Assistant, Microsoft's Cortana, and Amazon's Alexa, showing that they could send malicious commands to those devices.

The main principle that makes NUIT effective and dangerous is that microphones in smart devices can respond to near-ultrasound waves that the human ear cannot hear. The attack can therefore be carried out with minimal risk of exposure while still using conventional speaker technology.
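To make the "conventional speaker technology" point concrete, here is a minimal sketch that generates a pure near-ultrasound tone and checks that it sits above a rough human-hearing cutoff yet below the Nyquist limit of a typical 48 kHz audio pipeline, which is why ordinary speakers and microphones can in principle carry it. The 48 kHz sample rate, the 16 kHz hearing cutoff, and the 18 kHz test frequency are illustrative assumptions (real hearing varies with age, and real hardware frequency response varies by device), and the helper names are hypothetical. This is not the researchers' NUIT method; it encodes no voice command at all.

```python
import math

SAMPLE_RATE_HZ = 48_000     # assumption: a common sample rate for consumer audio hardware
HEARING_LIMIT_HZ = 16_000   # assumption: rough cutoff above which many adults hear little
CARRIER_HZ = 18_000         # hypothetical near-ultrasound test frequency


def nyquist(sample_rate_hz: int) -> float:
    """Highest frequency a digital audio system at this sample rate can represent."""
    return sample_rate_hz / 2


def is_near_ultrasound(freq_hz: float, sample_rate_hz: int = SAMPLE_RATE_HZ) -> bool:
    """True if the frequency is above the assumed hearing cutoff but still reproducible."""
    return HEARING_LIMIT_HZ < freq_hz < nyquist(sample_rate_hz)


def near_ultrasound_tone(freq_hz: float, duration_s: float,
                         sample_rate_hz: int = SAMPLE_RATE_HZ,
                         amplitude: float = 0.5) -> list[float]:
    """Return samples of a pure sine tone; refuse frequencies the system cannot represent."""
    if freq_hz >= nyquist(sample_rate_hz):
        raise ValueError("frequency must be below the Nyquist limit")
    n = round(duration_s * sample_rate_hz)  # number of samples for the requested duration
    return [amplitude * math.sin(2 * math.pi * freq_hz * i / sample_rate_hz)
            for i in range(n)]


tone = near_ultrasound_tone(CARRIER_HZ, 0.01)
print(len(tone))                        # 480
print(is_near_ultrasound(CARRIER_HZ))   # True
```

The check is deliberately simple: anything between the assumed hearing cutoff and half the sample rate can travel through a standard audio chain, such as a video call or a streamed video, while remaining hard for most listeners to notice.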

We've heard about near-ultrasound attacks before, but this work goes further in demonstrating how they can be carried out in practice. The attacker does not need to be anywhere near the listening device, as the signal can be transmitted, inaudible to the human ear, during a Zoom call or even via a YouTube video.

So yes, absolutely nothing special is required for this to work. The bigger challenge for the attacker is finding someone who actually has a smart speaker that will respond, and who has some or other automation set up that can be weaponised. That said, almost everyone has a smartphone or two, and many have Siri, Alexa or Google Assistant enabled by default, standing by to tell them today's weather forecast. Many of those assistants can also perform phone actions such as enabling Wi-Fi, opening a specific website, disabling the screen lock, and much more...

If your smart device lets you authenticate using your own voice fingerprint, it is recommended that you enable this additional security method. Chen also advised users to monitor their devices closely for microphone activations, which are shown by dedicated on-screen indicators on iOS and Android smartphones. Simply using earphones also prevents the sound from travelling to nearby smart speakers.

See: Inaudible ultrasound attack can stealthily control your phone, smart speaker

American university researchers have developed a novel attack, which they named "Near-Ultrasound Inaudible Trojan" (NUIT), that can silently attack devices powered by voice assistants, such as smartphones, smart speakers, and other Internet of Things (IoT) devices.